Pixel Pandemonium

Talking Heads - Road to Nowhere

Whatever you now find weird, ugly, uncomfortable and nasty about a new medium will surely become its signature. CD distortion, the jitteriness of digital video, the crap sound of 8-bit - all of these will be cherished and emulated as soon as they can be avoided. It’s the sound of failure: so much modern art is the sound of things going out of control, of a medium pushing to its limits and breaking apart. The distorted guitar sound is the sound of something too loud for the medium supposed to carry it. The blues singer with the cracked voice is the sound of an emotional cry too powerful for the throat that releases it. The excitement of grainy film, of bleached-out black and white, is the excitement of witnessing events too momentous for the medium assigned to record them.

-Brian Eno — A Year With Swollen Appendices, 1996

Lately I’ve been drawn back to the fundamental mechanisms that have shaped images and video for decades: algorithms that operate directly on raw data, where glitches emerge from the failure points of the medium itself, such as compression blocks, quantization errors, bitplanes, buffer overflows, or frame interpolation breaking down.

Emergence is at the center of this fascination. The artifacts produced by datamoshing aren’t just errors; they’re the result of an emergent system, much like cellular automata or other rule-based simulations. A corrupted motion vector doesn’t just break one frame. It cascades, propagates, evolves across the sequence in ways you can’t fully predict.

It’s not optimized for output: you don’t describe what you want and watch it materialize. It’s built for exploration. The craft is in tuning the system: adjusting the parameters, choosing the right input, knowing when to stop.

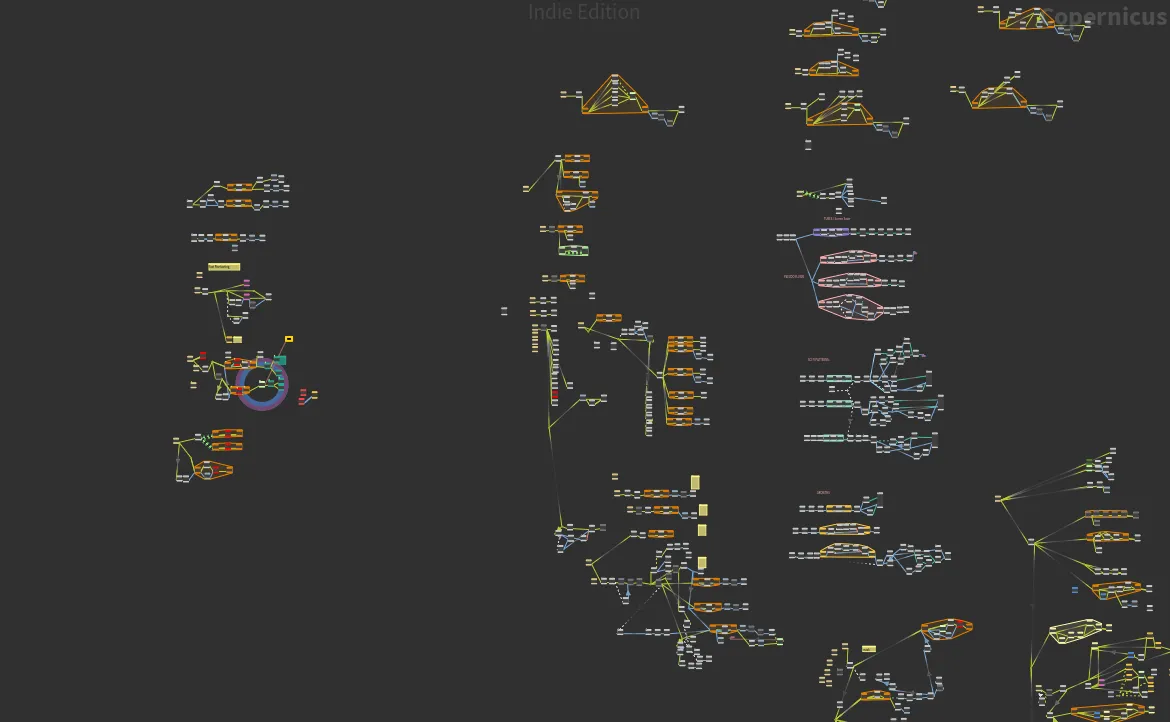

So I decided to build tools in Houdini COPs to explore the algorithms I love. Because Houdini is procedural, pixelsorting, datamoshing, seam carving, cellular automata, and dithering act as building blocks in larger systems, not just presets to apply.

Datamoshing

There is something about seeing a video fail, revealing the system underneath. Since datamoshing originates from encoding errors, which we don’t have access to in COPs, this implementation tries to replicate the effect as closely as possible. Block matching generates motion vectors from any input video; these quantify the movement of pixel blocks between frames. The motion vectors are then used to advect the frozen frame’s pixels, causing the characteristic distortion.
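To make the two steps concrete outside of COPs, here is a minimal pure-Python sketch of block matching followed by advection. It is an illustration under assumptions, not the COPs implementation: frames are small grayscale grids (lists of lists), the cost is a sum of absolute differences, and the names `block_match`, `advect`, `block`, and `radius` are hypothetical.

```python
def block_match(prev, curr, block=4, radius=2):
    """For each block of `curr`, find the offset (dx, dy) into `prev`
    whose pixels match best (lowest sum of absolute differences)."""
    h, w = len(curr), len(curr[0])
    vectors = {}
    for by in range(0, h, block):
        for bx in range(0, w, block):
            best, best_key = (0, 0), None
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    cost = 0
                    for y in range(by, min(by + block, h)):
                        for x in range(bx, min(bx + block, w)):
                            # Clamp samples that fall outside the frame.
                            sy = min(max(y + dy, 0), h - 1)
                            sx = min(max(x + dx, 0), w - 1)
                            cost += abs(curr[y][x] - prev[sy][sx])
                    # On equal cost, prefer the smaller displacement.
                    key = (cost, abs(dx) + abs(dy))
                    if best_key is None or key < best_key:
                        best, best_key = (dx, dy), key
            vectors[(bx // block, by // block)] = best
    return vectors


def advect(frozen, vectors, block=4):
    """Pull each output pixel from the frozen frame along its block's vector."""
    h, w = len(frozen), len(frozen[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = vectors[(x // block, y // block)]
            sy = min(max(y + dy, 0), h - 1)
            sx = min(max(x + dx, 0), w - 1)
            out[y][x] = frozen[sy][sx]
    return out
```

Feeding the advected result back in as the next `frozen` frame, while the vectors keep coming from the live video, is what lets the distortion accumulate over time.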

Fatboy Slim ft. Bootsy Collins - Weapon Of Choice

In the video above you can see the motion vectors interlaced with the result, but of course the algorithm also works with rendered CG motion vectors.

Pixelsorting

My love for pixelsorting started in 2020 when I created a webapp for a course in my bachelor studies. The sorted space itself fascinates me. Each sorting method, be it brightness, hue, saturation, or chroma, reveals a different dimension hidden in the data. COPs is the ideal environment to extend this “research” into a more procedural playground, which allows for a lot of experimentation.
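As a small illustration of how the choice of key changes the result, here is a hedged Python sketch that sorts each row of an image by one of the four measures mentioned above. The pixel format (RGB float tuples in 0..1), the Rec. 709 luma weights used for brightness, and the function names are assumptions made for this example.

```python
import colorsys


def brightness(p):
    r, g, b = p
    # Rec. 709 luma weights, one of several common brightness definitions.
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def hue(p):
    return colorsys.rgb_to_hsv(*p)[0]


def saturation(p):
    return colorsys.rgb_to_hsv(*p)[1]


def chroma(p):
    # Distance between the strongest and weakest channel.
    return max(p) - min(p)


def sort_rows(image, key):
    """Sort every row of the image independently by the given key."""
    return [sorted(row, key=key) for row in image]
```

Swapping `key=brightness` for `key=hue` on the same row gives a different ordering of the same pixels, which is the "different dimension" each method exposes.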

Checkered two way Bubble Sort

Exploring dimensions

It’s incredible that the first mention of pixelsorting comes from a 1988 paper written in FORTRAN. Researchers proposed clustering pixels in “sorted space” and transforming them back for image analysis. The idea of transforming the images back after sorting never occurred to me, but it lends itself to really interesting applications. Apply a blur in sorted space and you’re no longer blurring neighbors in 2D, you’re blending pixels that share similar values across the entire image. What was spatial becomes statistical. You might have a lot of philosophical questions now, like: What does it mean for pixels to be “related”, or for colors to be adjacent? Can I rearrange the pixels to show a different image? Why should I give a shit?
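The round trip can be sketched in a few lines of Python: remember the permutation that sorts the pixels, filter in sorted order, then use the same permutation to put every value back where it came from. This is a simplified 1D illustration with hypothetical helper names, not the method from the 1988 paper or the COPs setup.

```python
def to_sorted_space(values):
    """Return the values in sorted order plus the permutation that sorted them."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    return [values[i] for i in order], order


def from_sorted_space(sorted_values, order):
    """Send each value back to the position it originally came from."""
    out = [None] * len(order)
    for rank, original_index in enumerate(order):
        out[original_index] = sorted_values[rank]
    return out


def box_blur(seq, radius=1):
    """Average each element with its neighbors in the sequence."""
    out = []
    for i in range(len(seq)):
        lo, hi = max(0, i - radius), min(len(seq), i + radius + 1)
        out.append(sum(seq[lo:hi]) / (hi - lo))
    return out


def blur_in_sorted_space(values, radius=1):
    sorted_values, order = to_sorted_space(values)
    return from_sorted_space(box_blur(sorted_values, radius), order)
```

For the input `[9, 1, 8, 2]`, a spatial blur would mix the 9 with its neighbor 1, while the sorted-space blur mixes it with the 8 two pixels away, because they are neighbors by value rather than by position.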

Sorting images with different functions, sharpening then transforming pixels back

Blending Bubble Sort with the next Video Frame

Scatter Gather

While I love seeing the process of image pixels getting sorted, sometimes you want the fully sorted look as fast as possible.
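The post does not describe how the scatter/gather version works, so the following Python sketch shows only one common way to get a fully sorted result in a single pass: every pixel gathers all keys to count how many pixels must come before it, then scatters itself to that rank. The names `rank_of` and `scatter_sort` are hypothetical, and the quadratic counting loop is written for clarity rather than speed.

```python
def rank_of(keys, i):
    """Final sorted position of element i: the number of elements that
    precede it, with ties broken by original index to keep the sort stable."""
    k = keys[i]
    return sum(
        1
        for j, other in enumerate(keys)
        if other < k or (other == k and j < i)
    )


def scatter_sort(pixels, key):
    keys = [key(p) for p in pixels]
    out = [None] * len(pixels)
    # Each iteration is independent of the others, so on a GPU every
    # pixel could compute its rank and write itself in parallel.
    for i, p in enumerate(pixels):
        out[rank_of(keys, i)] = p
    return out
```

Unlike a bubble sort, there are no intermediate states to watch: the output jumps straight to the final arrangement.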

Falling Sand

Inspired by a music video from Oneohtrix Point Never’s newest album, I implemented a falling-sand simulation in OpenCL using cellular automata. It’s a two-layered system: one layer simulates the materials, and the other displaces the input texture based on that simulation. In the second video, you can see the simulation activate row by row, revealing both the material layer and the image layer underneath.
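The two-layer idea can be illustrated with a minimal Python sketch: whenever a grain moves in the material layer, the matching pixels in the image layer are swapped along with it. This is a sequential, single-material simplification with assumed names (`EMPTY`, `SAND`, `step`); the OpenCL version runs in parallel and its update rules are not documented here.

```python
EMPTY, SAND = 0, 1


def step(material, image):
    """Advance the simulation by one tick, modifying both layers in place."""
    h, w = len(material), len(material[0])
    # Scan bottom-up so a grain that falls is not processed again this tick.
    for y in range(h - 2, -1, -1):
        for x in range(w):
            if material[y][x] != SAND:
                continue
            # Try straight down first, then down-left, then down-right.
            for nx in (x, x - 1, x + 1):
                if 0 <= nx < w and material[y + 1][nx] == EMPTY:
                    material[y + 1][nx], material[y][x] = SAND, EMPTY
                    # The image layer follows the material layer.
                    image[y + 1][nx], image[y][x] = image[y][x], image[y + 1][nx]
                    break
```

Adding a material then means adding another cell value and another movement rule, for example a liquid that also tries to move sideways.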

Right now it supports ten materials with different behaviors, which puts me at roughly 2.15% of Noita’s 460+ materials.

There’s still a lot more to explore: bitplane editing, dithering, quantization… but that is for another time.